
I've spent the last couple of days trying to reproduce a paper, but I'm stuck on the size of the embedding layer.

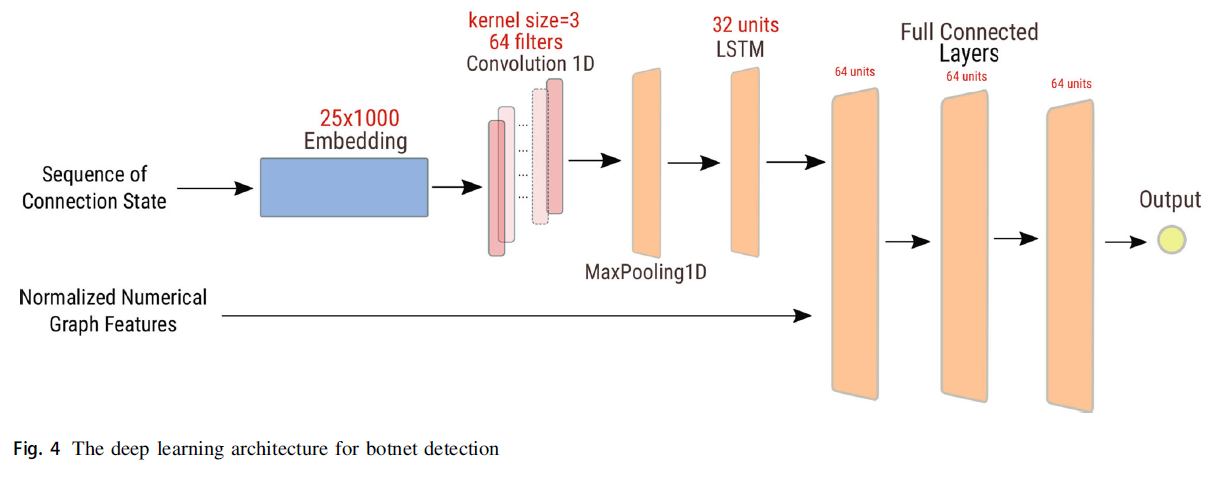

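The paper says the embedding has size 25*1000. Does this size refer to the dimensions of the matrix used inside the embedding layer? Maybe I just haven't read enough papers to have seen it phrased this way... Here is the paper's description of the embedding layer: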

From the sequence of connection states toward the output of the deep learning architecture, the first block is the embedding function, and it maps and associates a numeric vector with every connection state, which belongs to the connection array between two nodes. **Embedding leverages the distance function calculating between any two vectors to capture the relationship between the two correlated connection states. The matrix formed by these vectors is called embedding whose size is 25 × 1000.**
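To make the quoted passage concrete, here is a minimal sketch in plain Python. It assumes, purely for illustration, that the 25 × 1000 matrix has 25 rows (one per distinct connection state) and 1000 columns (the length of each vector); the excerpt does not say which axis is which, so the paper may intend the opposite. The random values and the names `NUM_STATES`, `EMBED_DIM`, and `euclidean` are hypothetical, and Euclidean distance is just one possible choice of "distance function".

```python
import math
import random

NUM_STATES = 25    # assumed: number of distinct connection states (rows)
EMBED_DIM = 1000   # assumed: length of each state's vector (columns)

rng = random.Random(0)
# The embedding is a table with one (trainable) vector per state.
embedding = [[rng.uniform(-1.0, 1.0) for _ in range(EMBED_DIM)]
             for _ in range(NUM_STATES)]

def euclidean(u, v):
    """Euclidean distance between two equal-length vectors."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(u, v)))

# Looking up a state is plain row indexing.
vec_a = embedding[3]
vec_b = embedding[7]

print(len(embedding), len(embedding[0]))  # rows, columns of the table
print(euclidean(vec_a, vec_b))            # distance between two states
```

After training, states that behave similarly would be expected to end up with a small distance between their rows.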

2 replies • 2020-10-16 08:05:03 +08:00

1

helloworld000 2020-10-16 06:50:02 +08:00

Yes. An embedding is just a hidden layer + softmax, and that hidden layer is the weight matrix you are trying to obtain as the embedding.
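The "hidden layer is the weight matrix" remark can be checked with a small sketch: multiplying a one-hot input by the weight matrix gives exactly the same result as picking out one row of that matrix, which is why an embedding layer is usually implemented as a lookup. The tiny 4 × 3 matrix `W` and the helpers `one_hot` and `vec_mat` are hypothetical, chosen only to keep the example readable.

```python
def one_hot(index, size):
    """Vector of zeros with a single 1.0 at position `index`."""
    return [1.0 if i == index else 0.0 for i in range(size)]

def vec_mat(vec, mat):
    """Row vector (length n) times matrix (n x d) gives a vector of length d."""
    cols = len(mat[0])
    return [sum(vec[i] * mat[i][j] for i in range(len(mat)))
            for j in range(cols)]

# Hypothetical weight matrix: 4 states, 3-dimensional vectors.
W = [[0.1, 0.2, 0.3],
     [0.4, 0.5, 0.6],
     [0.7, 0.8, 0.9],
     [1.0, 1.1, 1.2]]

state = 2
via_matmul = vec_mat(one_hot(state, len(W)), W)  # hidden-layer view
via_lookup = W[state]                            # lookup-table view

print(via_matmul == via_lookup)
```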


2

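thinszx OP @helloworld000 If the embedding layer's weight matrix is fixed at 25*1000, that should mean the width of my input matrix is also fixed at 25. Is my understanding correct? If so, I need to go check whether something is wrong with my data processing (゚ o ゚;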
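On the question of input width, a rough sketch may help, under the same unconfirmed assumption that the 25 rows correspond to distinct connection states. In a lookup-style embedding, the input is a sequence of integer state IDs; the table size limits which ID values are valid, not how long the sequence is. The function `embed` and the sample sequences are hypothetical.

```python
NUM_STATES = 25   # assumed: rows of the embedding matrix
EMBED_DIM = 1000  # assumed: columns of the embedding matrix

# Placeholder table; real values would be learned during training.
embedding = [[0.0] * EMBED_DIM for _ in range(NUM_STATES)]

def embed(sequence, table):
    """Map a sequence of integer state IDs to their vectors."""
    for s in sequence:
        if not 0 <= s < len(table):
            raise ValueError(f"state id {s} is outside 0..{len(table) - 1}")
    return [table[s] for s in sequence]

short_seq = [0, 3, 24]                        # 3 time steps
long_seq = [1, 1, 2, 5, 8, 13, 21, 0, 4, 7]   # 10 time steps

out_short = embed(short_seq, embedding)
out_long = embed(long_seq, embedding)

# Output shape is (sequence length, EMBED_DIM) in both cases.
print(len(out_short), len(out_short[0]))
print(len(out_long), len(out_long[0]))
```

Under this reading, the matrix size constrains the input values (IDs 0 to 24) rather than the input width. If the paper instead means 1000 states with 25-dimensional vectors, the same logic applies with the two roles swapped.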
